Generative AI has the potential to be disruptive – in both good and bad ways – for many professions, including PR. But what does that really mean?

When ChatGPT was released late last year, it quickly became the fastest new technology service to reach a million users, hitting that milestone in just 5 days. (For reference, Instagram, the next fastest, took 2.5 months; Twitter took 2 years.) Within 2 months, ChatGPT was estimated to have an astonishing 100 million monthly active users. Why? For one thing, the technology seems almost magical: just type questions or requests in plain language and get rich, detailed and nuanced answers, whole documents, even code. Superficially at least, the quality of the content created by ChatGPT seems to rival that produced by professional human writers (more on that later).

The buzz over ChatGPT followed a similarly enthusiastic reaction earlier in 2022 to AI systems like DALL-E 2, Midjourney and Stable Diffusion, which can produce visual content from text prompts, including art in the style of famous creators or photorealistic images. (The image at the top of this blog post is one example.) Now there are also audio and video generative AI systems that can create media that looks and sounds like real, living people (sometimes doing and saying things they have never done or said).

But for all the hype, ChatGPT and other generative AI platforms also struck a nerve. Is it crazy to think that AI is finally coming for your job? To many journalists, whose profession is already under assault from shrinking newsrooms and private equity-driven consolidation, generative AI is an ominous development. CNET and BuzzFeed, among others, revealed that they had been experimenting with using AI engines to write articles (albeit not without significant problems), raising questions about the future of traditional journalism.

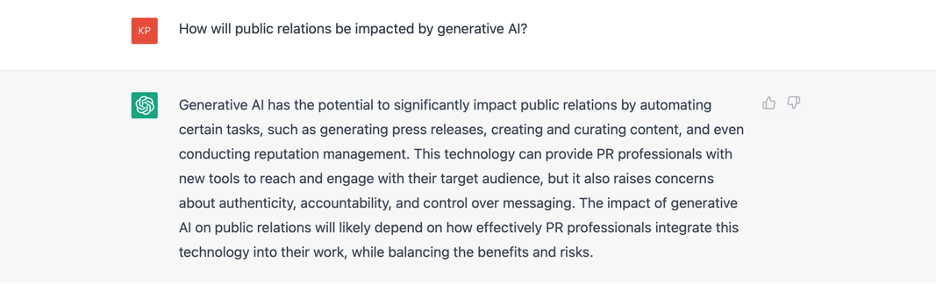

Given our focus on content, it seems reasonable to wonder about the implications for PR, too. To get a sense of what they might be, I figured I might as well go right to the source and ask ChatGPT itself:

[source: OpenAI]

There are some plausible ideas there, but let’s dig a little deeper.

Writing press releases? The demise of the press release has been predicted for ages, yet press releases are still used every day. But I doubt you’ll find many PR pros who would be sad to turn that task over to our new robot overlords.

Creating other content? Perhaps, but the content created by these AI systems – while well-structured and grammatically correct – often lacks personality. It’s also hard for an AI to create original custom content around, say, a specific company’s products or services when it wasn’t trained using the company’s unique or proprietary information (and AI still struggles with context).

Another huge issue is that these AI systems present their responses as fact, without sourcing or attribution. With misinformation on the rise, the time needed to vet AI-generated work can be considerable. In other words, there is content, there is factually correct content, and then there is great content… and AI systems can’t reliably deliver the last two. Yet. Still, I wouldn’t be surprised if media outlets (mimicking schoolteachers) started using software to detect whether a contributed article has been written by an AI.

Reputation management? Hmmm, now we’re getting into some interesting territory. In fact, this is an area where conversational AI is likely to create as much work for humans as it saves.

As I mentioned earlier, there have been some issues with how systems like ChatGPT and Google’s Bard respond to queries; in short, they often give wrong answers. In part this is due to how large language models work. They don’t really “understand” what you’re asking or even what they’re saying to you; they’re just assembling information into a format that mimics human communication patterns. But it’s also a function of how these models are trained. In the case of ChatGPT, the training data reportedly amounts to some 570 GB of text from books, forums and other sources found on the web (up to a specific cutoff date). And we all know how much incorrect information there is online.

If you want more information on the challenges of generative and cognitive AI, Gadi Singer at Intel has some interesting posts on Medium.

So, what happens when an AI starts spouting inaccuracies or made-up quotes about a company, product or person based on misinformation (or, more darkly, intentional disinformation) incorporated into its training data? Generative AI raises other potentially problematic PR issues, too, such as the sinister misuse of an AI-generated video or audio clip of an individual (perhaps the CEO of a publicly traded company) saying something false or damaging. Incidents like these could create a lot of headaches – and work – for PR pros.

In fact, as these generative AI systems catch on, it’s not hard to envision companies and individuals feeling the need to “flood the zone” with even more authoritative content in an effort to indirectly improve the quality of AI training data sets. That will drive the need for more editorial coverage, more bylined articles, more contributions to online forums like Reddit; in short, more of what we already do in PR.

That said, getting coverage may become harder if the more dystopian vision of AI-driven journalism comes to pass. How would you pitch a “reporter” that is actually an AI?

It’s important to remember that, as a technology, generative AI is still in its infancy. Those of us who work in this field often overestimate the impact of new technologies in the short term and underestimate it in the long term. But one thing is certain: generative AI is not going away. As these systems inevitably get better, incorporate new training data more frequently, add cognitive capabilities, and potentially learn how to distinguish facts from mis- or disinformation, everyone – including PR pros – will have to learn how to live with them and use them effectively.